Ubuntu as hypervisor

10. Sep '17

Introduction

Virtualization enables better utilization of hardware by making it possible to run multiple operating systems on the same computer. Even a small company might need to run Active Directory on Windows Server and WordPress on Ubuntu Server, but purchasing a separate physical server for each application is wasteful.

The problem with virtualization is that the term can refer to several different approaches with various nuances. Classically, virtualization refers to emulating a whole computer with all the accompanying hardware: CPU, memory management unit, storage, network, timers etc.

For example, on IBM S/390 processor and memory virtualization were supported from the start, whereas on Intel x86 CPU and memory virtualization were added later, incrementally.

VirtualBox and similar products made it possible to reach near-native speeds by means of dynamic translation, even on processors without hardware support for CPU or memory virtualization, but the breakthrough for x86 came in 2005, when Intel and AMD added hardware support.

For server consolidation, deduplication of memory and storage is important. Linux, for example, has supported kernel samepage merging (KSM) since version 2.6.32, released in 2009. The kernel periodically scans memory pages that have been marked as mergeable, merges pages with identical contents into a single page and marks that page copy-on-write. Running identical guest operating systems on such a host yields a significant drop in memory usage, regardless of the virtualization software used, as long as it marks guest memory as mergeable. To enable deduplication for storage, an appropriate filesystem should be used. ZFS supports inline deduplication when it is enabled: whenever a block is written to the filesystem, ZFS looks up the block's checksum in its deduplication table, which should fit in RAM for good performance, and if an identical block already exists, stores only a reference to it.
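KSM is controlled through files under /sys/kernel/mm/ksm: as root, writing 1 to `run` starts the ksmd scanner, and the `pages_shared` and `pages_sharing` counters report its progress. QEMU marks guest RAM as mergeable by default, so KVM guests benefit without extra configuration. The sketch below, built around a hypothetical helper `ksm_saved_bytes`, estimates the current savings from `pages_sharing`; treat the result as an approximation rather than exact accounting.

```shell
#!/bin/sh
# Hypothetical helper: estimate how much memory KSM currently saves.
# pages_sharing counts page mappings that now point at an already shared
# page, i.e. pages that no longer need physical memory of their own.
# The optional argument overrides the sysfs directory (useful for testing).
ksm_saved_bytes() {
  dir=${1:-/sys/kernel/mm/ksm}
  sharing=$(cat "$dir/pages_sharing") || return 1
  echo $(( sharing * $(getconf PAGESIZE) ))
}
```

On a KVM host, run `echo 1 > /sys/kernel/mm/ksm/run` as root, let ksmd go through a few scan cycles with the guests running, and then call `ksm_saved_bytes` without arguments.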

QEMU

QEMU is an emulator created by Fabrice Bellard; it now supports x86, MIPS, Sun SPARC, ARM, PowerPC and several other architectures.

QEMU supports a wide range of hardware

KVM

With the KVM project, support for hardware-assisted CPU and memory virtualization was added to Linux as a kernel module, hence the name Kernel-based Virtual Machine. Additionally, QEMU was patched to take advantage of the newly added functionality.

KVM essentially turns Linux into a ring -1 hypervisor

Under KVM, unmodified Windows can be booted without significant performance loss. To make sure KVM works as expected, check that kvm_intel or kvm_amd appears in the output of lsmod. If neither does, the virtualization extensions might be disabled in the BIOS, or the BIOS battery might be dead so that the motherboard keeps forgetting the settings.
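The same check can be scripted. The sketch below assumes the usual /proc layout: the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in /proc/cpuinfo shows that the processor has the extension, while /proc/modules, which is what lsmod reads, shows whether kvm_intel or kvm_amd actually loaded. The function names are hypothetical, and the file arguments exist only so the functions can be tried on sample data.

```shell
#!/bin/sh
# Hypothetical helper: does the CPU advertise hardware virtualization?
# vmx = Intel VT-x, svm = AMD-V. Defaults to the real /proc/cpuinfo.
cpu_has_virt() {
  grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Hypothetical helper: is the vendor-specific KVM module loaded?
# /proc/modules is what lsmod reads; module names use underscores there.
kvm_module_loaded() {
  grep -Eq '^kvm_(intel|amd) ' "${1:-/proc/modules}"
}
```

For example, `cpu_has_virt && kvm_module_loaded && ls -l /dev/kvm`. If the CPU flag is present but the module is missing, the firmware setting described above is a likely suspect.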

To run a local virtual machine with 1 GB of RAM and a dual-core CPU on Ubuntu:

apt install qemu-kvm
# Create a sparse 20 GB raw disk image
truncate -s 20G hdd.bin
# Boot the installer ISO with the empty disk attached
qemu-kvm -m 1024 -smp 2 \
  -hda hdd.bin \
  -cdrom ubuntu-mate-16.04.2-desktop-amd64.iso

By default QEMU emulates an IDE controller for storage and an Intel network interface card (e1000). QEMU also emulates a router with a DHCP server, so the virtual machine is assigned the IP address 10.0.2.15 and the router sits at 10.0.2.2. TCP and UDP traffic generated by the guest OS appears as if it were generated by the QEMU process. Note that with user-mode networking, ping is not available.
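Because the guest sits behind QEMU's built-in NAT, the host cannot open connections to it directly. QEMU's user-mode network backend accepts a `hostfwd` rule that forwards a host port into the guest. The sketch below is a hypothetical helper, `user_net_args`, that only prints the option string; the `net0` id and the port numbers are arbitrary choices.

```shell
#!/bin/sh
# Hypothetical helper: print QEMU options for user-mode networking with one
# TCP port forwarded from the host into the guest.
#   $1 - host port, $2 - guest port, $3 - NIC model (defaults to e1000)
user_net_args() {
  host_port=$1
  guest_port=$2
  model=${3:-e1000}
  # hostfwd=tcp::HOSTPORT-:GUESTPORT - with no guest address given, QEMU
  # forwards to the address its DHCP server hands out (10.0.2.15)
  printf '%s\n' "-netdev user,id=net0,hostfwd=tcp::${host_port}-:${guest_port} -device ${model},netdev=net0"
}
```

Used unquoted, the output splits into separate arguments: `qemu-kvm -m 1024 -smp 2 -hda hdd.bin $(user_net_args 2222 22)`. Once an OpenSSH server runs in the guest, `ssh -p 2222 user@localhost` on the host should reach it.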

For more complex networking scenarios a TAP interface can be used. In that case a virtual network interface is created on the host and the guest's network traffic appears on that interface. This can be used to talk to the host or, in conjunction with bridges, to expose the virtual machines' traffic to external network interfaces.
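A TAP interface can be created ahead of time with iproute2 and attached to an existing bridge. The sketch below assumes a bridge (here called br0) already exists; `attach_tap` and the `DRY_RUN` switch are hypothetical names. Creating the interface needs root, but the `user` option hands it to an unprivileged account that can then run QEMU.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create a persistent TAP interface owned by a user
# and enslave it to an existing bridge.
#   $1 - TAP name (e.g. tap0), $2 - bridge (e.g. br0), $3 - owning user
attach_tap() {
  tap=$1 bridge=$2 owner=$3
  run ip tuntap add dev "$tap" mode tap user "$owner" || return
  run ip link set "$tap" master "$bridge" || return
  run ip link set "$tap" up
}
```

QEMU can then use the interface with `-netdev tap,id=net0,ifname=tap0,script=no,downscript=no -device virtio-net-pci,netdev=net0`; `script=no` stops QEMU from running its own ifup helper, since the interface is already configured.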

Paravirtualization

Paravirtualization is a technique that gives a significant performance boost to virtualized operating systems that are aware they are running on virtualized hardware. Paravirtualization usually covers storage and networking, but it can also refer to memory ballooning: the guest OS marks memory blocks it does not need and the host OS can reclaim those blocks.

With paravirtualization, the guest operating system is presented with hardware that is optimized for virtualization and does not exist in real life. Often the guest OS is first installed as usual on, say, an emulated standard SATA controller and Intel network card. Once the guest additions package provided by the virtualization software vendor is installed, the hypervisor swaps out the hardware presented to the guest OS. VMware, for example, presents a VMXNET network adapter, while KVM presents a virtio network device with Red Hat as its vendor, although Red Hat has never developed or manufactured such hardware.

The virtio paravirtualization framework is now used for storage and networking and is supported out of the box by Linux and BSD guests, so no additional drivers need to be installed for KVM-based virtual machines. Microsoft Hyper-V and VMware use similar approaches, but their devices are not virtio-compatible. Current Linux guests should nevertheless work out of the box under Hyper-V and ESXi as well.
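From inside a Linux guest it is easy to confirm that the paravirtualized disk is in use: virtio-blk disks are named vda, vdb and so on, whereas emulated IDE or SATA disks (and virtio-scsi disks) usually appear as sd*. The sketch below, with the hypothetical helper `virtio_blk_disks`, simply lists the vd* entries under /sys/block.

```shell
#!/bin/sh
# Hypothetical helper: list block devices driven by virtio-blk.
# The optional argument overrides /sys/block (useful for testing).
virtio_blk_disks() {
  sysblock=${1:-/sys/block}
  for dev in "$sysblock"/vd*; do
    # If nothing matches, the glob stays unexpanded; skip it
    [ -e "$dev" ] || continue
    basename "$dev"
  done
}
```

In a guest whose disk is attached with `if=virtio`, this should print vda. `lspci -nn` in the same guest should also list the virtio devices under Red Hat's PCI vendor ID 1af4.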

To enable network, storage and video output paravirtualization for a KVM-based virtual machine started from the command line:

qemu-kvm -m 1024 -smp 2 -cpu host -boot menu=on \
  -drive file=ubuntu-mate-16.04.2-desktop-amd64.iso,media=cdrom \
  -drive file=hdd.bin,format=raw,if=virtio,discard=unmap \
  -netdev user,id=net0 -device virtio-net-pci,netdev=net0 \
  -device virtio-balloon \
  -vga virtio

In the past, paravirtualization also referred to running modified guest operating systems on hardware that lacked support for, for example, CPU or memory virtualization. Microsoft, for instance, created a modified version of Windows suitable for running on the Xen hypervisor.

Containers

LXC (Linux Containers) is essentially a successor to OpenVZ, and both are similar to BSD jails and Solaris Zones. Even Microsoft has added containers to Windows Server. Docker used LXC in the past, but has since moved in its own direction while making use of the same kernel infrastructure.

With containers the root filesystems are separate, that is, each container has its own folders containing OS files, but the kernel used by the containers is the same and hence shared. Namespaces separate the containers' processes, network interfaces and mount points, while control groups (cgroups) limit and account for the resources they use.

Kernel is shared, but root filesystems are separated

By default LXC does not reserve memory or disk space for a container, which keeps resource usage across the containers optimal.
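The namespaces a process belongs to can be inspected through /proc: each entry in /proc/PID/ns is a symbolic link such as `net:[4026531992]`, and two processes share a namespace exactly when the links are identical. The sketch below is a hypothetical helper, `same_ns`, built on that observation; inspecting other users' processes requires root.

```shell
#!/bin/sh
# Hypothetical helper: do two processes share a given namespace?
#   $1, $2 - process IDs, $3 - namespace type (pid, net, mnt, uts, ipc, ...)
# Returns 0 if shared, 1 if not, 2 if either link cannot be read.
same_ns() {
  pid_a=$1 pid_b=$2 ns=$3
  a=$(readlink "/proc/$pid_a/ns/$ns") || return 2
  b=$(readlink "/proc/$pid_b/ns/$ns") || return 2
  [ "$a" = "$b" ]
}
```

On the host, `same_ns 1 PID net`, where PID is the container's init process as reported by `lxc-info -n ubuntu-trusty-test -p`, should return 1 because the container has its own network namespace. At the same time `uname -r` prints the same kernel release on the host and inside the container, since the kernel is shared.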

The process tree for such a setup looks roughly like the following:

systemd
├─acpid
├─agetty --noclear tty1 linux
├─atd -f
├─cron -f
├─dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation
├─dhclient -v -pf /run/dhclient.lxcbr0.pid -lf /var/lib/dhcp/dhclient.lxcbr0.leases lxcbr0
├─lxc-start -d -n ubuntu-trusty-test
│   └─init
│       ├─dbus-daemon --system --fork
│       ├─dhclient -1 -v -pf /run/dhclient.eth0.pid -lf /var/lib/dhcp/dhclient.eth0.leases eth0
│       ├─getty -8 38400 console
│       ├─cron
│       ├─sshd -D
│       ├─systemd-logind
│       ├─systemd-udevd --daemon
│       ├─upstart-file-br --daemon
│       ├─upstart-socket- --daemon
│       └─upstart-udev-br --daemon
├─lxc-start -g debian-wheezy-test
│   └─systemd
│       ├─dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation
│       ├─dhclient -v -pf /run/dhclient.eth0.pid -lf /var/lib/dhcp/dhclient.eth0.leases eth0
│       ├─agetty --noclear -s console 115200 38400 9600
│       ├─cron
│       ├─sshd -D
│       ├─systemd-journal
│       ├─systemd-logind
│       └─systemd-udevd
├─lxc-start -d -n debian-jessie-test
│   └─systemd
│       ├─dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation
│       ├─dhclient -v -pf /run/dhclient.eth0.pid -lf /var/lib/dhcp/dhclient.eth0.leases eth0
│       ├─sshd -D
│       ├─systemd-journal
│       └─systemd-logind
├─sshd -D
├─systemd-journal
├─systemd-logind
└─systemd-udevd

Here each container has its own virtual eth0 interface, and a DHCP client has been started in each container. Since every container appears on the network with its own IP address, connecting to a container is as easy as installing an OpenSSH server in it.

Note that containers have security implications: kernel vulnerabilities can make it possible to hijack the host machine. Additionally, each syscall needs to be aware of whether any namespace restrictions apply. For example, as of this writing the Btrfs syscalls haven't been refactored to accommodate filesystem namespacing, so listing Btrfs subvolumes from inside an LXC container shows the subvolumes of the host machine as well, even though you would assume it wouldn't. Combining a kernel vulnerability with the short-sightedness of some kernel module might have disastrous consequences.

Foreign architecture containers

QEMU's user-mode emulation allows executing binaries compiled for foreign CPU architectures under Linux and Darwin/Mac OS X. In user-mode emulation only the code that runs in userspace is translated; system calls are passed on to the host kernel, which runs natively. This means that emulated binaries are slower than native ones, but not as slow as when emulating the whole computer. This enables, for example, armhf development on x86-64.

binfmt-support allows Linux to transparently execute binaries of a foreign architecture
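The kernel's binfmt_misc mechanism recognizes foreign executables by matching bytes of the ELF header, notably the two-byte e_machine field at offset 18. The sketch below, with the hypothetical helper `elf_machine`, reads that field with od and maps a handful of common machine numbers to names; it is meant for illustration, not as a replacement for `file` or `readelf -h`.

```shell
#!/bin/sh
# Hypothetical helper: print the CPU architecture recorded in the
# e_machine field of an ELF file. Only a few common values are mapped.
elf_machine() {
  f=$1
  # Bytes 0-3: ELF magic number 0x7f 'E' 'L' 'F'
  if [ "$(od -An -tx1 -N4 "$f" | tr -d ' \n')" != 7f454c46 ]; then
    echo not-elf
    return 1
  fi
  # Byte 5 (EI_DATA): 1 = little-endian, 2 = big-endian
  data=$(od -An -tu1 -j5 -N1 "$f" | tr -d ' \n')
  # Bytes 18-19: e_machine, stored in the file's own byte order
  set -- $(od -An -tu1 -j18 -N2 "$f")
  if [ $# -ne 2 ]; then
    echo truncated
    return 1
  fi
  if [ "$data" = 2 ]; then
    machine=$(( $1 * 256 + $2 ))
  else
    machine=$(( $2 * 256 + $1 ))
  fi
  case $machine in
    2) echo sparc ;;
    3) echo i386 ;;
    8) echo mips ;;
    20) echo ppc ;;
    21) echo ppc64 ;;
    40) echo arm ;;
    62) echo x86_64 ;;
    183) echo aarch64 ;;
    *) echo "unknown-$machine" ;;
  esac
}
```

On an x86-64 host `elf_machine /bin/ls` should print x86_64, while a binary from an armhf root filesystem should print arm.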

Statically linked binaries can be executed either by setting the execute bit on the binary and running it directly, or by passing the binary as an argument to the corresponding QEMU command, e.g. qemu-arm-static. Dynamically linked programs are trickier, because the program will attempt to load the shared libraries it depends on, so an LXC container is the easiest way to run dynamically linked foreign programs:

apt install qemu-user-static binfmt-support
lxc-create -n fedora-arm-test -t download -- -d fedora -r 25 -a armhf
# Make the emulator available inside the container's root filesystem
cp /usr/bin/qemu-arm-static /var/lib/lxc/fedora-arm-test/rootfs/usr/bin/
lxc-start -d -n fedora-arm-test
lxc-attach -n fedora-arm-test

Similarly, it should be possible to run the kFreeBSD flavor of Debian or Ubuntu in a FreeBSD jail.
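Each handler registered by binfmt-support shows up as a file under /proc/sys/fs/binfmt_misc, for example qemu-arm; its first line is `enabled` or `disabled` and an `interpreter` line names the emulator. The sketch below, with the hypothetical helper `binfmt_interpreter`, checks that a handler is active and prints its interpreter.

```shell
#!/bin/sh
# Hypothetical helper: print the interpreter of an enabled binfmt_misc
# handler, or return 1 if the handler is missing or disabled.
#   $1 - handler name (e.g. qemu-arm), $2 - optional directory override
binfmt_interpreter() {
  entry=${2:-/proc/sys/fs/binfmt_misc}/$1
  [ -r "$entry" ] || return 1
  # The first line of an entry reads "enabled" or "disabled"
  [ "$(head -n 1 "$entry")" = enabled ] || return 1
  sed -n 's/^interpreter //p' "$entry"
}
```

If `binfmt_interpreter qemu-arm` prints /usr/bin/qemu-arm-static, the kernel will look for that path when an ARM binary is started. Unless the handler was registered with the F flag, the path is resolved inside the container's root filesystem, which is why the emulator is copied into the container above.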

libvirt

libvirt is a framework for managing hypervisors of different sorts: VirtualBox, KVM, ESXi, LXC, Xen, bhyve etc. It provides a daemon for creating, managing and deleting virtual machines, and APIs for talking to that daemon, including over an SSH connection.

To turn a clean Ubuntu server installation into a remotely managed KVM hypervisor:

apt install qemu-kvm libvirt-bin openssh-server bridge-utils vlan

To connect the virtual machines with each other and to the outside world, reconfigure /etc/network/interfaces along the lines of the following:

# Management network to robotics club, replace address with unused IP
auto br-mgmt
iface br-mgmt inet static
    address 192.168.12.4
    netmask 255.255.255.0
    gateway 192.168.12.1
    dns-nameservers 192.168.12.1
    bridge_ports enp3s0

# Bridge to connect virtual machines to public internet,
# exposed on one of the NICs on the physical machine
auto br-wan
iface br-wan inet manual
    bridge_ports enp5s0

# Internal network to connect virtual machines to each other,
# not exposed on any NIC on the physical machine
auto br-lan
iface br-lan inet manual
    bridge_ports none

Set up public key based root access from your laptop to the machine and use virt-manager to connect to it. In fact, the same virt-manager instance on your laptop can connect to several hypervisor machines:

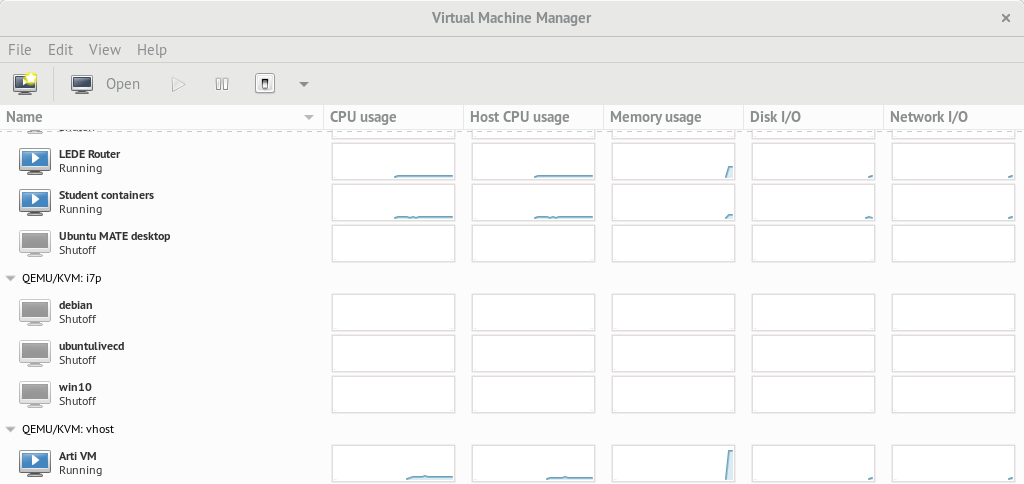

virt-manager connected to three hypervisors
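Both virt-manager and virsh address a remote hypervisor with a libvirt connection URI; for the system-wide QEMU/KVM driver tunnelled over SSH it has the form qemu+ssh://USER@HOST/system. The sketch below is a hypothetical helper, `libvirt_ssh_uri`, that builds such a URI, defaulting to root as in the setup above.

```shell
#!/bin/sh
# Hypothetical helper: libvirt URI for the system QEMU/KVM driver on a
# remote host, reached over SSH.
#   $1 - host name or address, $2 - SSH user (defaults to root)
libvirt_ssh_uri() {
  host=$1
  user=${2:-root}
  printf 'qemu+ssh://%s@%s/system\n' "$user" "$host"
}
```

After copying your key with `ssh-copy-id root@192.168.12.4`, `virsh -c "$(libvirt_ssh_uri 192.168.12.4)" list --all` lists the virtual machines on that host, and `virt-manager -c "$(libvirt_ssh_uri 192.168.12.4)"` opens the same connection graphically.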

To get the best storage performance, add a VirtIO SCSI controller and switch the hard disk interfaces from SATA to SCSI. More tweaking is required to reclaim free disk space on the host and to get optimal performance on SSDs; see more information here. For each network interface, select virtio as the model. Note that on multi-CPU installations it is also important to have NUMA configured correctly.
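Reclaiming free space only works if discard requests travel the whole chain: the guest filesystem must issue them, the virtual disk must advertise support (virtio-scsi does; virtio-blk only in newer QEMU and Linux versions), and the host must pass them through, for example with QEMU's `discard=unmap` drive option. Inside the guest the kernel exposes the limit in /sys/block/DEV/queue/discard_max_bytes, where 0 means discard is not supported. The sketch below wraps that check in a hypothetical helper, `supports_discard`.

```shell
#!/bin/sh
# Hypothetical helper: does a block device in the guest accept discards?
#   $1 - device name (e.g. sda), $2 - optional /sys/block override
supports_discard() {
  dev=$1
  f=${2:-/sys/block}/$dev/queue/discard_max_bytes
  [ -r "$f" ] || return 1
  # 0 means the device does not support discard at all
  [ "$(cat "$f")" -gt 0 ]
}
```

For example, `supports_discard sda && fstrim -v /` releases the unused blocks of the guest's root filesystem back to the host when the disk supports it.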